No, no, no. Not that kind. The interesting and exciting kind. Mathematical and statistical models!

TOF can hear your pulses quickening all the way up here in his Fortress of Solitude. Tell us more, TOF! (he hears you cry).

But you knew that the moretelling was going to happen, didn't you?

Some years ago, TOF read a review of a book whose title and author he has forgotten. This is too bad, because he now has an Amazon.com gift card and wouldn't mind buying it, if the price were right. The gist was that a research team studying beach erosion had concluded that the actual erosion on the beaches they studied never matched the erosion predicted by mathematical models. That's "never" as in "not ever, not even one time."

[Image: Box-ing champion]

One marker of the Late Modern Age is a blind faith in Pythagorean number magic. Sometimes -- and TOF knows this is hard to believe -- the outputs of computer models are even referred to as "data." People who would believe that are subject to any number of other delusions and TOF knows of a bridge they could buy in Pennsylvania.

TOF tells you three times: the output of a model is supposed to be a prediction of a real world characteristic. That prediction will be wrong, but sometimes not too wrong, and the nature of its wrongness may lead to insights. But it is not in and of itself "data." Yet so much of what is reported in the newsfog -- number of illegal immigrants from Patagonia, number of cases of Patagonian rat fever, and so on -- is actually the output of models, not the counting of noses. This is a great comfort to those who might otherwise be tasked with nasal enumeration. Building a model is done in safe, clean, and air-conditioned accommodations. Counting actual noses runs the risk of being bitten by Patagonian rats, or immigrants.*

(*) TOF recollects a computer model of illegal immigrants that, broken down by country-of-origin, predicted a negative amount of illegal Irish immigration. One can only imagine sons of the Gael slipping clandestinely from Maclean Ave. in the Bronx on their way to Tir na nOg. No one seemed to regard this as a deficiency in the model.

Models provide a drastic simplification of reality, and are in no sense accurate or true representations of real drainage systems [or whatever]. This is why they are wrong.

Simplicity and Complexity

So why model? Because waiting until after a new design is launched or the new policy promulgated is a heckuva time to discover that the design or policy sucks great horny toads. Modelling is a way to test a plan "on paper" before you have to "bend tin." However, for this very reason, the design of the model deserves painstaking attention, especially to the uncertainties that are always built into it. Otherwise, it's simply one more way to fool yourself. Believing your own PR is insane.

Back in 1948, Dr. Warren Weaver defined the notions of simplicity and complexity in the sciences.

1. Organized simplicity describes a system characterized by a small number of significant variables tied together in deterministic relationships. Sometimes only one significant variable is involved, perhaps two; at most maybe four. The interactions and effects of these variables can be grasped analytically through mathematics.

A good example is Newton's Law of Universal Gravitation:

F = G(M*m)/d²
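As a small worked example, here is a sketch of the law in its usual inverse-square form: the force is proportional to the product of the two masses and inversely proportional to the square of the distance between them. The function name and the round figures for the Earth and the Moon are assumptions chosen for illustration, not values from the post.

```python
G = 6.674e-11  # gravitational constant in N m^2 / kg^2 (approximate)

def gravitational_force(mass_1, mass_2, distance):
    """Magnitude of the attraction between two point masses, in newtons."""
    return G * mass_1 * mass_2 / distance ** 2

# Illustrative round numbers for the Earth and the Moon (assumed values).
earth_kg = 5.97e24
moon_kg = 7.35e22
separation_m = 3.84e8

near = gravitational_force(earth_kg, moon_kg, separation_m)
far = gravitational_force(earth_kg, moon_kg, 2 * separation_m)
print(f"force at d:  {near:.3e} N")
print(f"force at 2d: {far:.3e} N (ratio {far / near:.2f})")
```

Doubling the distance cuts the force to a quarter. Only the two masses and the one distance appear anywhere in the calculation.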

Analytically, the only two variables are mass and distance. Physically, there are only two masses and one relationship (gravity). For three or more bodies, there is no analytical solution other than an infinite convergent series, and in practice one uses numerical methods of approximation. There is no term in the Law for "time," there is no term for "electrical charge," there is no term (as Laplace pointed out) for God. (Although that capital G looks suspicious.... LOL!)

Now, physicists can get away with organized simplicity because they make simplifying assumptions: motion in a vacuum; perfectly elastic collisions; "ideal" gases; infinite Euclidean 3-space; and so on. They abstract just that which is amenable to those Cartesian methods which, since the 17th century, are the only permissible ways of doing Science!™ And it works well enough in many cases.

But not all.

2. Disorganized complexity. As the n-body problem illustrates, there are also systems characterized by a large number of elements. Each of the many elements may be individually erratic, or even unknown. However, despite this "random" (recte: "unknown") individual behavior, the system as a whole possesses certain orderly and analyzable average properties. In effect, we can substitute a group average for the (possibly unknown) individual element values.

Common examples include actuarial tables and gambling tables. The casino does not know in which roulette slot the little ball will drop. (Or, if they do, do not gamble in that casino.) But they can know with great precision the likelihood that the ball will drop into each slot. The same applies to things like insurance, quality control of manufactured product, thermodynamics in physics, etc.
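The roulette point can be tried out in a few lines. This is only a sketch under stated assumptions: an American wheel with 38 slots, 18 of them red, and a pseudo-random number generator standing in for the little ball. The names `red_fraction`, `SLOTS`, and `RED_SLOTS` are made up for the example.

```python
import random

SLOTS = 38          # American wheel: 1-36 plus 0 and 00 (assumed layout)
RED_SLOTS = 18      # eighteen of those slots are red

def red_fraction(spins, rng):
    """Fraction of spins landing on red, with slots 0..17 standing in for red."""
    hits = sum(1 for _ in range(spins) if rng.randrange(SLOTS) < RED_SLOTS)
    return hits / spins

rng = random.Random(2014)  # fixed seed so the run is repeatable
expected = RED_SLOTS / SLOTS
for spins in (10, 1_000, 100_000):
    observed = red_fraction(spins, rng)
    print(f"{spins:>7} spins: red {observed:.4f} (long-run value {expected:.4f})")
```

No single spin is predictable, yet the observed fraction settles toward 18/38 as the number of spins grows. That aggregate regularity is all the casino needs.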

But only if the complexity is disorganized; that is, the variation among elements is "random." It is precisely the randomness that makes statistical mechanics work. There is another kind of complexity that cannot be studied this way.

3. Organized complexity. Just as organized simplicity is often a simplification of disorganized complexity, so too is disorganized complexity a simplification of something more. We say a behavior is "random" when we do not know its causal structure or -- more likely -- the causes are multiple and individually of small account. When their effects add together, we get the famous normal distribution; when they multiply, we get the lognormal, and so on. Basically, we have agreed to focus only on the vital few and let these minor causes "vary as they vary." But sometimes that doesn't work.
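The remark about adding versus multiplying many small causes can be checked with a rough simulation. Everything here is an assumption for illustration: 200 independent "minor causes" per outcome, each a factor drawn uniformly between 0.9 and 1.1, and sample skewness used as a crude symptom of shape (near zero for a bell curve, clearly positive for the long right tail of a lognormal).

```python
import math
import random
import statistics

rng = random.Random(1948)

def small_causes(count):
    """Many minor, independent influences, each a factor between 0.9 and 1.1."""
    return [rng.uniform(0.9, 1.1) for _ in range(count)]

def skewness(values):
    """Sample skewness: near 0 if symmetric, positive for a long right tail."""
    mean = statistics.fmean(values)
    spread = statistics.pstdev(values)
    return statistics.fmean(((v - mean) / spread) ** 3 for v in values)

sums, products = [], []
for _ in range(5_000):
    causes = small_causes(200)
    sums.append(sum(causes))            # effects that add
    products.append(math.prod(causes))  # effects that multiply

print(f"skew of sums:          {skewness(sums):+.2f}")
print(f"skew of products:      {skewness(products):+.2f}")
print(f"skew of log(products): {skewness([math.log(p) for p in products]):+.2f}")
```

The sums come out roughly symmetric and the products lopsided. Taking logarithms of the products restores the symmetry, which is what "lognormal" means.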

Significant problems in biology, economics, and so on can seldom be characterized by two to four variables. It is impossible to hold all the other variables constant. We wind up with a half-dozen, or even several dozen quantities, varying simultaneously in interconnected ways. Genomes or economies have a wholeness to them that a mass of gas or even a mass of policyholders does not. Weaver mentions such things as predicting the price of wheat, stabilizing a currency, controlling economic business cycles (lol), predicting behavior patterns of a labor union, a group of manufacturers, a racial minority, allocating resources to win a war (or avoid one), and so on.

These problems are too complex to handle with the 19th century mathematical techniques that were so successful on problems with two-to-four elements. But neither can they be handled with the statistical mechanics of the 20th century that worked on problems of disorganized complexity. You cannot take an average of heterogeneous data.* This is the reason for the failure of things like socialism or the assumption that the underlying elements of evolution are random "like" thermodynamics. Genes are complex, but organized. They do not vary "at random."

(*) average of heterogeneous data. Example: The average human being has roughly one testicle and one ovary. So what does that tell you? Or consider taking the average of a time series of automotive battery paste weights (shown here) or of the aggregate temperatures from weather stations in different regions at different altitudes. What would such an average mean?
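To make the weather-station question concrete, here is a toy calculation. The two stations and all their readings are invented for illustration. They are not real measurements.

```python
import statistics

# Hypothetical readings in degrees C, invented purely for illustration.
valley_station = [24.1, 25.3, 23.8, 24.9, 25.0]
summit_station = [-3.2, -2.5, -4.1, -3.0, -2.8]

pooled = valley_station + summit_station
pooled_mean = statistics.fmean(pooled)

# How far is the closest actual reading from the pooled "average"?
nearest_gap = min(abs(reading - pooled_mean) for reading in pooled)

print(f"valley mean: {statistics.fmean(valley_station):.1f}")
print(f"summit mean: {statistics.fmean(summit_station):.1f}")
print(f"pooled mean: {pooled_mean:.1f}")
print(f"closest reading to pooled mean is {nearest_gap:.1f} degrees away")
```

The pooled mean lands in the gap between the two stations, more than ten degrees from anything either one recorded. It is arithmetically correct and describes no place at all.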

A third way of doing science was needed for problems of this type, as Friedrich August von Hayek noted in his Nobel acceptance speech:

Organized complexity... means that the character of the structures showing it depends not only on the properties of the individual elements of which they are composed, and the relative frequency with which they occur, but also on the manner in which the individual elements are connected with each other. In the explanation of the working of such structures we can for this reason not replace the information about the individual elements by statistical information, but require full information about each element if from our theory we are to derive specific predictions about individual events. Without such specific information about the individual elements we shall be confined to what on another occasion I have called mere pattern predictions - predictions of some of the general attributes of the structures that will form themselves, but not containing specific statements about the individual elements of which the structures will be made up. [Emph. added]


-- Friedrich August von Hayek, "The Pretence of Knowledge"

The Third Way

[Image: Yang and el-Haik, disguised as a book.]

The bad news is that in most complex situations, we cannot know the "full information about each element." Important factors may be unmeasured and even unmeasurable. Sometimes, if the information is the least little bit off, the calculated response may diverge enormously from the actual. This part of complexity theory is sometimes called "chaos theory" and little butterflies begin flapping their wings in Patagonia to cause storms in Kalamazoo.
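The "least little bit off" problem shows up even in a one-line system. The sketch below uses the logistic map, a standard textbook toy that is not taken from the post, with a growth rate of 4 and two starting values that differ by one part in ten billion. The function name and the particular numbers are assumptions for illustration.

```python
def logistic_orbit(start, steps, rate=4.0):
    """Iterate x -> rate * x * (1 - x) and return every value visited."""
    values = [start]
    for _ in range(steps):
        values.append(rate * values[-1] * (1.0 - values[-1]))
    return values

baseline = logistic_orbit(0.2, 60)
nudged = logistic_orbit(0.2 + 1e-10, 60)   # the "least little bit off"

for step in (0, 10, 20, 30, 40, 50, 60):
    gap = abs(baseline[step] - nudged[step])
    print(f"step {step:>2}: gap {gap:.3e}")
```

The two runs agree closely for the first few dozen steps and then part company altogether, although nothing random was ever introduced.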

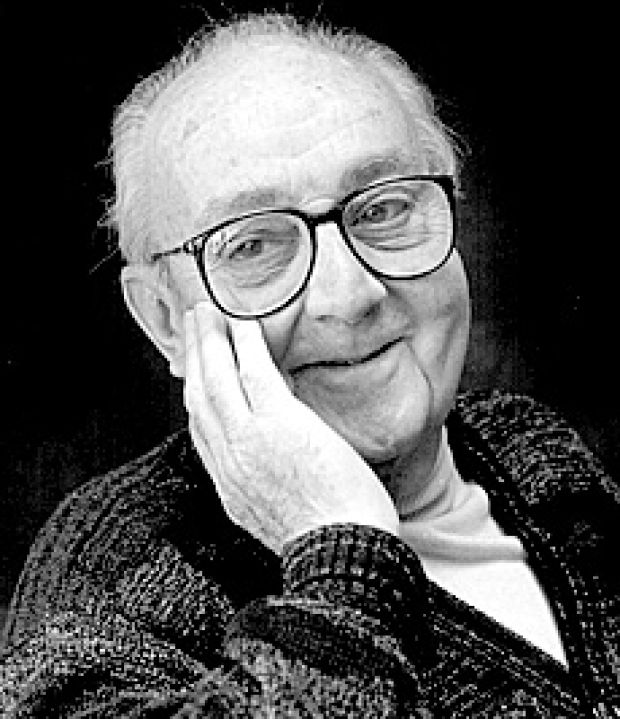

[Image: Juran, a native of Transylvania and looking it.]

The good news is that even though real-world systems are multi-causal, a small subset of the contributing factors often accounts for the bulk of the response. This is known as the Pareto Principle, so-called by the late Joseph M. Juran. This is also called the 80-20 rule: 80% of the results are due to 20% of the contributors. For example, most of the rushing yardage gained in the NFL is gained by a small percentage of the running backs, who mostly seem to play for Seattle. (But let us not beat a dead horse.*) Most of the words used in documents are drawn from a small percentage of the words in the dictionary. (Compare how often "the" appears in this post to how often "numinous" appears.) The percentages need not be precisely 80% and 20%, of course. Forty US Metropolitan Statistical Areas account for 50% of the population of the USA. (Two metro areas -- NYC and LA -- account for one in ten Americans.)

(*) Dead horse. The reference is to the Seahawk-Bronco "super" bowl.
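Returning to the 80-20 rule: checking how concentrated a set of contributions is takes only a sort and a sum. The function name and the defect counts below are hypothetical, made up to show the arithmetic.

```python
def share_from_top(contributions, top_fraction):
    """Share of the total produced by the largest `top_fraction` of contributors."""
    ranked = sorted(contributions, reverse=True)
    top_count = max(1, round(len(ranked) * top_fraction))
    return sum(ranked[:top_count]) / sum(ranked)

# Hypothetical defect counts for ten causes, invented for illustration.
defects_by_cause = [120, 45, 12, 8, 5, 4, 3, 1, 1, 1]

share = share_from_top(defects_by_cause, 0.20)
print(f"top 20% of causes account for {share:.1%} of defects")
```

With these made-up counts, the top two causes carry 165 of the 200 defects. Real data will give other percentages, as the post notes.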

So sometimes we can handle organized complexity by identifying the "vital few" elements and modeling those, leaving the others "to vary as they vary." But that won't always work. A model of the US population based solely on the forty largest metropolitan areas will overlook the fact that non-metropolitan areas -- rural, for example -- may behave very differently. In 1936, the US Presidential election was modeled by a massive sample, which predicted a big victory for Alf Landon. (Franklin Roosevelt won in a landslide.) The model was predicated largely on a sample of telephone numbers, and during the Great Depression telephone ownership was correlated with greater wealth and therefore with different political inclinations.
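The telephone-list problem is easy to reproduce in miniature. The proportions below are invented and are not historical figures: they simply assume that phone owners are a minority who lean toward the challenger more than everyone else does. The sketch compares a huge sample drawn only from phone owners with a small sample drawn at random from the whole population.

```python
import random

rng = random.Random(1936)

# Invented proportions, not historical figures.
PHONE_OWNERSHIP = 0.35           # share of voters with a telephone
SUPPORT_IF_PHONE = 0.60          # challenger's support among phone owners
SUPPORT_IF_NO_PHONE = 0.30       # challenger's support among everyone else

def make_voter():
    """Return (has_phone, backs_challenger) for one simulated voter."""
    has_phone = rng.random() < PHONE_OWNERSHIP
    support = SUPPORT_IF_PHONE if has_phone else SUPPORT_IF_NO_PHONE
    return has_phone, rng.random() < support

population = [make_voter() for _ in range(200_000)]

def challenger_share(voters):
    """Fraction of the given voters who back the challenger."""
    return sum(1 for _, backs_challenger in voters if backs_challenger) / len(voters)

phone_sample = [v for v in population if v[0]][:50_000]   # huge but skewed
random_sample = rng.sample(population, 1_000)             # small but representative

print(f"whole population:    {challenger_share(population):.3f}")
print(f"phone-list sample:   {challenger_share(phone_sample):.3f}")
print(f"small random sample: {challenger_share(random_sample):.3f}")
```

The phone-list sample is fifty times larger and still badly wrong, because size does nothing to correct a sample drawn from the wrong population.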

So in 1948 Weaver proposed, as the "Third Way" for dealing with organized complexity, the methods of operations research pioneered during WWII, coupled with the new-fangled "electronic calculating machines." In short, computer modeling.

Next time, we will look at How Models Go Bad.

Interesting, thanks for this. It's always fascinating, but makes me wonder: why didn't i learn any of this in 4 years of undergrad? You're right maybe i just slept through it!

I spent a lot of time in an Engineering curriculum, and only got one semester of Statistics.

The instructor for modeling taught us a lot about modeling, and a little about the difference between results and data.

Still, I think much of this knowledge comes from experience comparing models to reality.

Are you missing a solidus in "F=G(m*M)d^2"?

JJB

He writes skiffy. In his universe(s), attraction becomes stronger as bodies move apart! ;-)

Rob

A group of wealthy investors wanted to be able to predict the winner of the Superbowl. So they hired a group of biologists, a group of statisticians, and a group of physicists. Each group was given a year to research the issue. After one year, the groups all reported to the investors.

.... Read the rest here. :)

http://wdmt.blogspot.com/2014/02/monday-joke.html

Would it be fair to say that modeling is an Art and not a Science, strictly speaking?

For science seeks understanding of causation, and a Cause may be defined as an arrow pointing from one thing to another.

Art, on the other hand, seeks to achieve some practical goal, the result matters and not the understanding.

"They abstract just that which is amenable to those Cartesian methods which, since the 17th century, are the only permissible ways of doing Science!™"

Now that is funny!

Cool and macho character.
