Hey, ya ever see those brain image scans where they pretend they took snapshots of actual brain activity (rather than, say, model outputs of oxygen consumption in various regions)? Turns out there may be a slight problem:

"Functional MRI (fMRI) is 25 years old, yet surprisingly its most common statistical methods have not been validated using real data. Here, we used resting-state fMRI data from 499 healthy controls to conduct 3 million task group analyses. Using this null data with different experimental designs, we estimate the incidence of significant results. In theory, we should find 5% false positives (for a significance threshold of 5%), but instead we found that the most common software packages for fMRI analysis (SPM, FSL, AFNI) can result in false-positive rates of up to 70%. These results question the validity of some 40,000 fMRI studies and may have a large impact on the interpretation of neuroimaging results."

Cluster failure: Why fMRI inferences for spatial extent have inflated false-positive rates, by Anders Eklund, et al. Proceedings of the National Academy of Sciences of the United States of America (2016)

Well that explains why positive results were once obtained from a dead salmon. It does not explain why we keep getting positive results from neuroscientists, whose brains seem to light up at the thought of software highlighting regions in the brain. Esp. in the hypothalamus.

Well that explains why positive results were once obtained from a dead salmon. It does not explain why we keep getting positive results from neuroscientists, whose brains seem to light up at the thought of software highlighting regions in the brain. Esp. in the hypothalamus.

From the paper "Neural correlates of interspecies perspective taking in the post-mortem Atlantic Salmon: An argument for multiple comparisons correction," by Craig M. Bennett, et al. (2009)

INTRODUCTION

With the extreme dimensionality of functional neuroimaging data comes extreme risk for false positives. Across the 130,000 voxels in a typical fMRI volume the probability of a false positive is almost certain. Correction for multiple comparisons should be completed with these datasets, but is often ignored by investigators. To illustrate the magnitude of the problem we carried out a real experiment that demonstrates the danger of not correcting for chance properly.

METHODS

Subject. One mature Atlantic Salmon (Salmo salar) participated in the fMRI study. The salmon was approximately 18 inches long, weighed 3.8 lbs, and was not alive at the time of scanning.

TOF loves that droll last sentence, delivered with admirable deadpan.
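To put the "130,000 voxels" point in numbers, here is a minimal sketch. It treats every voxel as an independent test of a true null, which real fMRI data are not (neighbouring voxels are correlated), so read it as an idealization; the two thresholds are illustrative choices, not necessarily the ones Bennett et al. used, and the function names are made up for the example.

```python
# Idealized sketch: every voxel is treated as an independent test of a true null.
# Real voxels are spatially correlated, so these numbers only illustrate the scale.

N_VOXELS = 130_000  # voxel count quoted in the salmon paper's introduction


def expected_false_positives(alpha: float, n_tests: int) -> float:
    """Expected number of null voxels that cross the threshold by chance alone."""
    return alpha * n_tests


def prob_at_least_one(alpha: float, n_tests: int) -> float:
    """Chance that at least one of n independent null tests comes out 'significant'."""
    return 1.0 - (1.0 - alpha) ** n_tests


for alpha in (0.05, 0.001):  # illustrative uncorrected thresholds
    print(f"alpha={alpha}: expect about {expected_false_positives(alpha, N_VOXELS):,.0f} "
          f"false positives; P(at least one) = {prob_at_least_one(alpha, N_VOXELS):.6f}")
```

At 0.05 the sketch expects about 6,500 chance "activations"; even at 0.001 it still expects about 130. In both cases at least one false positive is, for practical purposes, certain.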

|

| Graph from here |

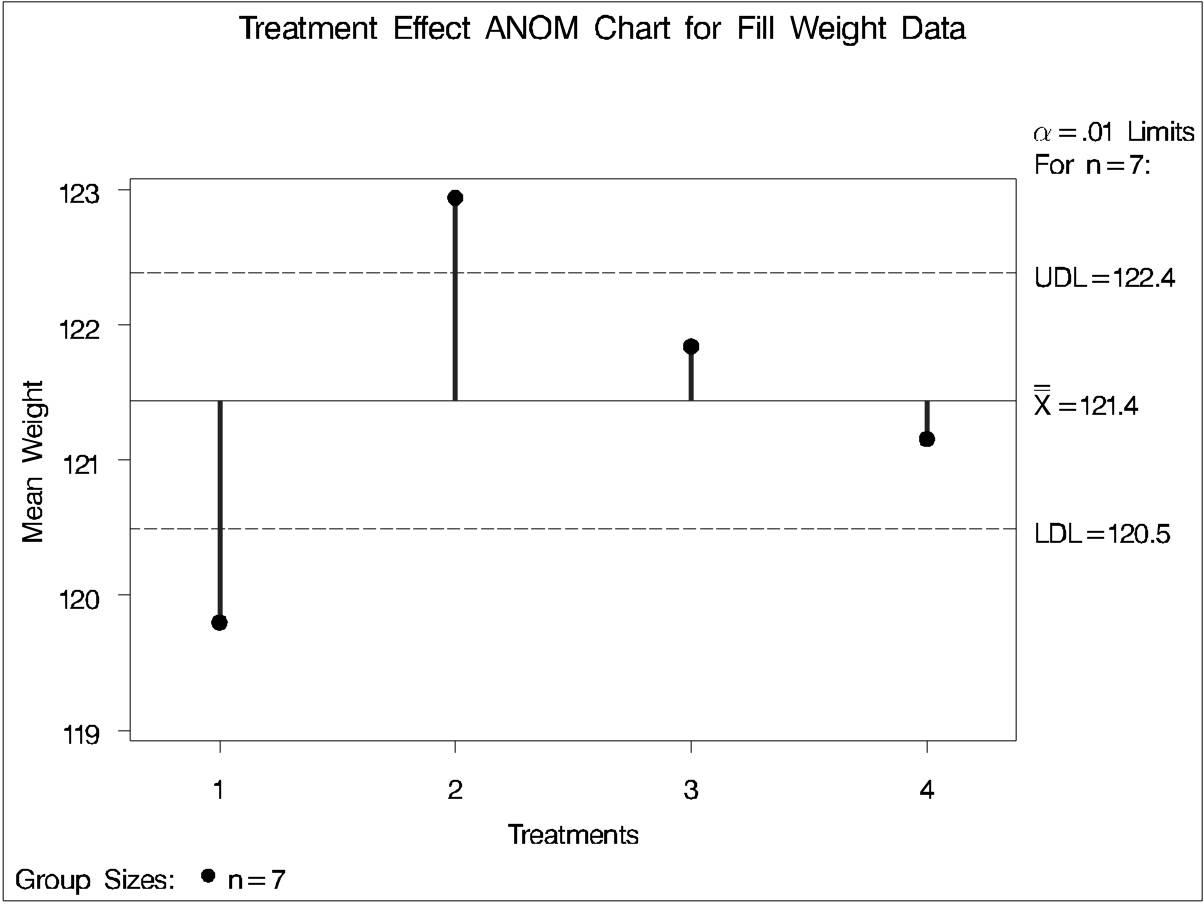

Suppose you have limits such that any one sample of size n has a 5% risk of falling beyond them for no particular reason. (We call that "random chance" but don't get carried away.) But now suppose you are comparing four group means, as in the illo above. Each one has a 5% risk of giving a false alarm and thus triggering a "wild goose chase" for an assignable cause even when there isn't one.
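The 5%-per-sample risk can be checked by simulation. This is a minimal sketch under assumptions the post doesn't spell out: the data are normal with a known mean and standard deviation, and the "limits" are the usual two-sided 1.96-sigma limits for the mean of a sample of size n. The names `limits_for_mean` and `false_alarm_rate` are hypothetical.

```python
import math
import random


def limits_for_mean(mu: float, sigma: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Two-sided limits mu +/- z*sigma/sqrt(n) for the mean of n draws.

    Assumption: normal data with known mu and sigma; with z = 1.96 about 5%
    of purely random sample means fall outside these limits.
    """
    half_width = z * sigma / math.sqrt(n)
    return mu - half_width, mu + half_width


def false_alarm_rate(n: int, trials: int = 100_000, seed: int = 1) -> float:
    """Fraction of purely random samples (no assignable cause) whose mean lands outside the limits."""
    rng = random.Random(seed)
    lo, hi = limits_for_mean(0.0, 1.0, n)
    alarms = 0
    for _ in range(trials):
        mean = sum(rng.gauss(0.0, 1.0) for _ in range(n)) / n
        if not lo <= mean <= hi:
            alarms += 1  # a "wild goose chase" would start here
    return alarms / trials


print(false_alarm_rate(n=5))  # should come out close to 0.05
```

Nothing in the simulated data has a cause behind it, yet roughly one sample in twenty still trips the limits.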

The assumption that there is no actual cause to be found, and that the differences are due to sampling variation and to small variations from a host of causes too small to be worth acting upon, is called the Null Hypothesis. Nothing to see here. Move along. Notice that this doesn't mean there is nothing to see. It means you don't have sufficient evidence to make the Hunt for the Cause worthwhile.

With four means, the likelihood of a false alarm is the likelihood that at least one of the samples will provide a false alarm. That probability is the complement of the probability that none of them does so. If the four samples are independent, it is 1 - 0.95^4 = 0.185. That is, our risk of a false alarm is about 18.5% rather than 5% unless we compensate for the additional uncertainty.
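The arithmetic, plus two textbook ways to "compensate," fits in a few lines. This sketch assumes the four comparisons are independent. Bonferroni (divide alpha by the number of tests) and Šidák (solve 1 - (1 - a)^k = alpha for a) are standard corrections chosen here for illustration; they are not necessarily what any particular fMRI package does, and the function names are hypothetical.

```python
import random


def familywise_error(alpha: float, k: int) -> float:
    """P(at least one false alarm) across k independent tests, each run at level alpha."""
    return 1.0 - (1.0 - alpha) ** k


def bonferroni(alpha: float, k: int) -> float:
    """Per-test level that keeps the family-wise rate at or below alpha."""
    return alpha / k


def sidak(alpha: float, k: int) -> float:
    """Per-test level that makes the family-wise rate exactly alpha, assuming independence."""
    return 1.0 - (1.0 - alpha) ** (1.0 / k)


def simulated_familywise(alpha: float, k: int, trials: int = 200_000, seed: int = 2) -> float:
    """Monte Carlo check: under the null hypothesis each p-value is uniform on [0, 1)."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(k))  # did any of the k tests fire?
        for _ in range(trials)
    )
    return hits / trials


k, alpha = 4, 0.05
print(round(familywise_error(alpha, k), 4))  # 0.1855
print(round(familywise_error(bonferroni(alpha, k), k), 4))
print(round(familywise_error(sidak(alpha, k), k), 4))
print(simulated_familywise(alpha, k))
```

With the Bonferroni level (0.0125 per test) the family-wise rate drops to just under 5%; the Šidák level brings it to 5% exactly, but only if the tests really are independent.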

The salmon-chasers and the authors of the new study linked above claim that such corrections were not being made properly (by many investigators, in the salmon case, and by the software's cluster-based methods, in the new study) when determining "significant" signals in fMRI scans, making false alarms more likely. He who lives by the software dies by the software. It's always better to know what you are doing. Or at least, to know what your machines are doing.

_______________________________

h/t to Matt Briggs, Statistician to the Stars, for taking note of the paper and blogging about it on his site. He also links to previous posts of his about the new phrenology of fMRI.

Previous TOFian comments on fMRI are here:

- Jul 19, 2012 ... The current sexy thing among the cognoscenti is the use of fMRI to "prove" that there is no free will, a topic which, for some reason seems to ... (tofspot.blogspot.com)
- Aug 10, 2012 ... Here's a title for you, “BMI Not WHR Modulates BOLD fMRI Responses in a Sub-Cortical Reward Network When Participants Judge the ... (tofspot.blogspot.com)
- Feb 23, 2013 ... It occurred to me that comparing fMRI (functional magnetic resonance imaging) scans of the brain of an actor with those of an astronomer might ... (tofspot.blogspot.com)
- Apr 18, 2013 ... Especially lacking in statistical power are human neuroimaging studies—especially those that use fMRI to infer brain activity—noted the ... (tofspot.blogspot.com)

Man, that dead salmon never gets old! Figuratively, at least. Came up in a conversation this morning at work, and now here again.

It really is the gift that keeps on giving.

From Matt Briggs:

No, it’s “Cluster failure”. But one is tempted…One is sorely tempted.

Yes. Yes, I will confess I was tempted also.

I've always been partial to "cluster-flunk", when the stronger version has to be avoided.

Even before reading the post, I thought that top picture looked a little fishy ...